It’s now time to build upon our review of Rhythm, Structure, Instrumentation, Emotional Expressiveness, Originality, Genre Conventions, Lyrics, Harmony and Melody. How can we use Machine Learning techniques to help us weave together the music styles and forms of our two artists, Theta and Pi?

Any machine learning exercise requires us to extract the key features needed to train models. Here are some key musical features we could extract to combine our two different styles of music:

Rhythm patterns - Extract the rhythmic styles and drum patterns from each genre using techniques like beat tracking, onset detection, and rhythm quantification. Identify common beats, tempo, syncopation, swing vs straight feel etc. Then generate new composite rhythms blending genres.
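To make the rhythm idea concrete, here is a minimal sketch in plain Python. It assumes onset and beat times (in seconds) have already been produced by an onset detector and a beat tracker, and that drum parts have been quantised to fixed step grids. The function names `swing_ratio` and `blend_patterns`, the tolerance, and the threshold are illustrative choices of ours, not part of any library.

```python
from typing import List


def swing_ratio(onsets: List[float], beat_times: List[float], tol: float = 0.02) -> float:
    """Average long/short ratio of the two halves of each beat.

    Roughly 1.0 suggests a straight feel, roughly 2.0 a triplet swing.
    Assumes at most one off-beat onset matters per beat (eighth-note feel).
    """
    ratios = []
    for start, end in zip(beat_times, beat_times[1:]):
        # Keep onsets that fall inside the beat, ignoring those on the beat itself.
        inside = [t for t in onsets if start + tol < t < end - tol]
        if not inside:
            continue
        off_beat = inside[0]
        ratios.append((off_beat - start) / (end - off_beat))
    return sum(ratios) / len(ratios) if ratios else 1.0


def blend_patterns(a: List[int], b: List[int], weight: float = 0.5,
                   threshold: float = 0.5) -> List[int]:
    """Blend two equal-length step patterns (1 = hit, 0 = rest).

    `weight` is the share given to pattern `b`; a step becomes a hit when
    the weighted mix reaches `threshold`.
    """
    if len(a) != len(b):
        raise ValueError("patterns must have the same number of steps")
    mixed = [(1 - weight) * x + weight * y for x, y in zip(a, b)]
    return [1 if m >= threshold else 0 for m in mixed]


# Hypothetical kick patterns on an 8-step grid, one per artist.
theta_kick = [1, 0, 0, 0, 1, 0, 0, 0]
pi_kick = [1, 0, 0, 1, 0, 0, 1, 0]
composite = blend_patterns(theta_kick, pi_kick)
```

With the default weight and threshold the composite keeps every hit from either pattern; raising the threshold above 0.5 keeps only the hits both patterns share.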

Melodic contours - Analyse the shape and melodic intervals of vocal lines and instrumental melodies in each genre. Capture distinctive melodic motifs, ornamentations, and phrasing. Synthesize new melodies that fuse characteristic melodic elements from each style.
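As a small illustration of what "shape and melodic intervals" can mean in practice, the sketch below works on a melody that has already been transcribed to MIDI note numbers (an assumption; transcription itself is a separate step). It computes the semitone intervals, an up/down/repeat contour string in the style of Parsons code, and an interval histogram that could be compared between the two artists. The function names are our own.

```python
from collections import Counter
from typing import List


def intervals(pitches: List[int]) -> List[int]:
    """Semitone steps between consecutive notes."""
    return [b - a for a, b in zip(pitches, pitches[1:])]


def contour(pitches: List[int]) -> str:
    """Melodic shape as U (up), D (down) or R (repeat) per step."""
    return "".join(
        "U" if step > 0 else "D" if step < 0 else "R"
        for step in intervals(pitches)
    )


def interval_histogram(pitches: List[int]) -> Counter:
    """How often each interval occurs; a crude fingerprint of melodic style."""
    return Counter(intervals(pitches))


# Hypothetical five-note motif: C4, D4, E4, D4, D4.
motif = [60, 62, 64, 62, 62]
print(contour(motif))  # UUDR
```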

Harmony and chords - Extract the chord progressions and harmonic patterns using chord recognition algorithms. Identify differences in harmony, modulations, and chord voicings between genres. Generate blended chord progressions that transition smoothly between the two harmonic languages.
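One simple family of chord recognition algorithms is template matching: compare a 12-bin chroma vector (energy per pitch class, C first) against idealised chord shapes and pick the closest. The sketch below is a deliberately minimal version that only knows major and minor triads and assumes the chroma vector has already been computed elsewhere. All names in it are illustrative.

```python
import math
from typing import Dict, List

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def chord_templates() -> Dict[str, List[float]]:
    """Binary templates for the 12 major and 12 minor triads."""
    templates = {}
    for root in range(12):
        for suffix, third in (("", 4), ("m", 3)):
            pitch_classes = {root, (root + third) % 12, (root + 7) % 12}
            templates[NOTE_NAMES[root] + suffix] = [
                1.0 if i in pitch_classes else 0.0 for i in range(12)
            ]
    return templates


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity, returning 0.0 for an all-zero vector."""
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return sum(x * y for x, y in zip(u, v)) / norm if norm else 0.0


def recognise_chord(chroma: List[float]) -> str:
    """Name of the triad whose template is most similar to the chroma vector."""
    templates = chord_templates()
    return max(templates, key=lambda name: cosine(chroma, templates[name]))


# Hypothetical chroma frame with most energy on C, E and G.
frame = [0.9, 0.0, 0.1, 0.0, 0.8, 0.0, 0.0, 0.7, 0.0, 0.1, 0.0, 0.0]
print(recognise_chord(frame))  # C
```

Running this over successive frames gives a chord sequence per track, which is the raw material for comparing progressions between the two styles.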

Timbre and instrumentation - Analyse the instrumentation and sound palette through spectrogram analysis, onset detection etc. Extract the tonal qualities that define each genre, like synth textures, guitar tones, orchestration. Recreate these tones using sound synthesis and appropriately integrate the instruments.
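A common numeric proxy for how "bright" or "dull" a sound is, and so one ingredient of timbre, is the spectral centroid: the magnitude-weighted mean frequency of the spectrum. The sketch below computes it with NumPy over a whole signal, using two synthetic tones as stand-ins for real instrument recordings. It is a simplification; in practice the centroid is usually tracked frame by frame.

```python
import numpy as np


def spectral_centroid(signal: np.ndarray, sr: int) -> float:
    """Magnitude-weighted mean frequency (Hz) of the whole signal."""
    windowed = signal * np.hanning(len(signal))  # reduce spectral leakage
    magnitude = np.abs(np.fft.rfft(windowed))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sr)
    total = magnitude.sum()
    return float((freqs * magnitude).sum() / total) if total > 0 else 0.0


sr = 22050
t = np.arange(sr) / sr  # one second of samples

# Synthetic stand-ins: a pure low tone, and the same tone with a strong high partial.
dull = np.sin(2 * np.pi * 220 * t)
bright = dull + 0.8 * np.sin(2 * np.pi * 3520 * t)

dull_centroid = spectral_centroid(dull, sr)
bright_centroid = spectral_centroid(bright, sr)
```

The tone with the added high partial yields a much higher centroid, which is the kind of difference we would expect between, say, a mellow pad and a bright lead.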

Song structure - Understand the typical song forms, section lengths, and repetition patterns using structure detection techniques. Create new structural templates that combine, for instance, the conventions of pop verse-chorus with the 12-bar blues form.

Lyrics - Use NLP models to analyse the vocabulary, language styles, rhyme schemes in the lyrics of each genre. Generate new lyrics that coherently fuse together the linguistic styles.
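Before reaching for a full NLP model, a rhyme scheme can be roughly approximated by comparing line endings. The sketch below labels lines by the last two letters of their final word, which is a crude spelling-based heuristic and an assumption on our part; a proper approach would compare phonemes. The example lyric is invented for illustration.

```python
import re
from typing import List


def rhyme_key(line: str, n: int = 2) -> str:
    """Last `n` letters of the final word, used as a rough rhyme signature."""
    words = re.findall(r"[a-z']+", line.lower())
    return words[-1][-n:] if words else ""


def rhyme_scheme(lines: List[str]) -> str:
    """Label each line A, B, C... by its rhyme signature (sketch: up to 26 rhymes)."""
    labels = {}
    scheme = []
    for line in lines:
        key = rhyme_key(line)
        if key not in labels:
            labels[key] = chr(ord("A") + len(labels))
        scheme.append(labels[key])
    return "".join(scheme)


verse = [
    "The sun is going down",
    "I walk alone tonight",
    "The lights of this old town",
    "Are fading out of sight",
]
print(rhyme_scheme(verse))  # ABAB
```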

Emotional valence - Use audio emotion recognition to capture the mood, energy, and sentiment of each genre. Generate music that combines the emotive qualities in novel ways, like melancholic country overlaid with high-energy EDM.

The key is to identify distinct musical traits through audio analysis and ML, and to quantify their similarities and differences. We can then use generative models and algorithms to integrate these characteristics in musically and emotionally meaningful ways.

Tools

We can make use of some general purpose tools to extract these features.

Librosa is a Python package for music and audio analysis. It provides the building blocks necessary to create music information retrieval systems.

It can be used to extract features from music and to visualise them as waveforms and spectrograms.

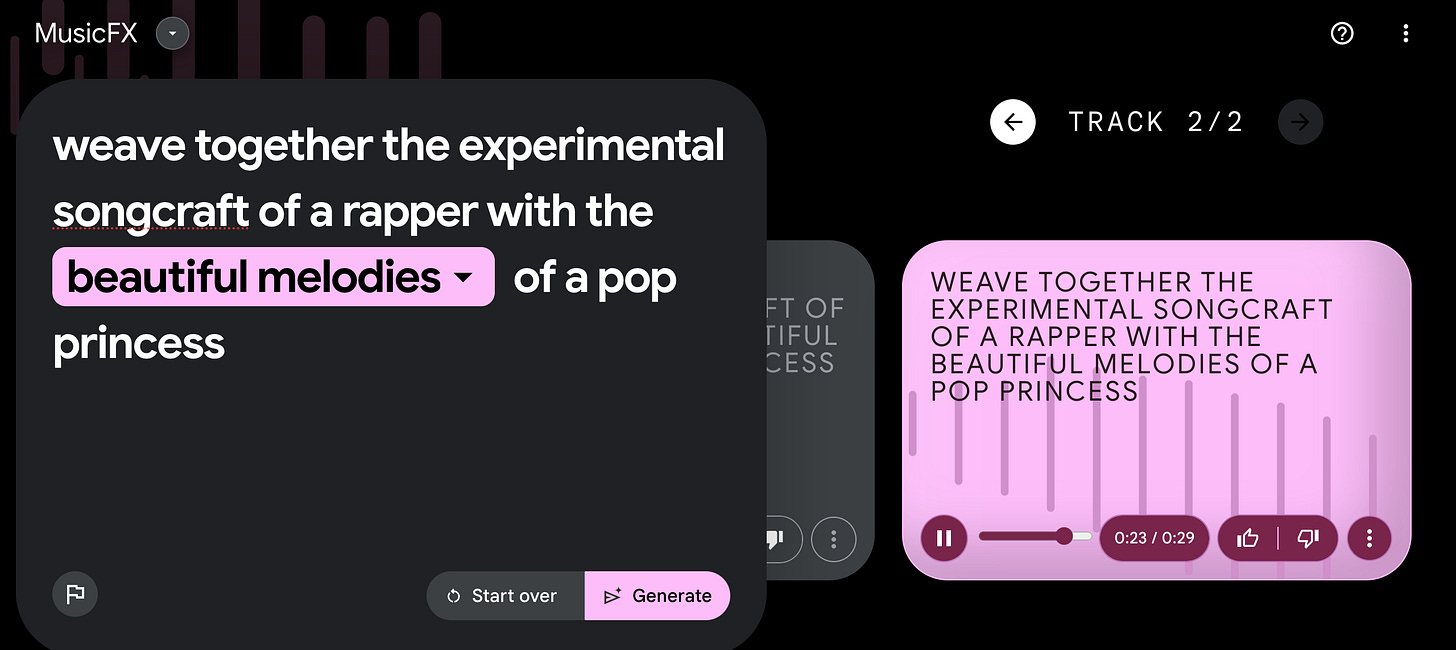

Google’s AI Test Kitchen features MusicFX, a powerful tool capable of generating samples of music.

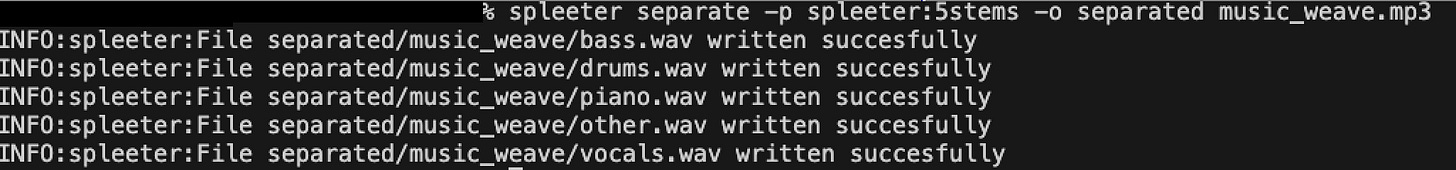

Spleeter is an open-source tool from the French music streaming service Deezer that can be used to isolate vocal or instrumental tracks in an audio file.

Magenta Studio is a collection of plugins that can be used to Continue, Drummify, Generate, Groove or Interpolate music.

We can use these tools to achieve our goal of creating some beautiful music in collaboration with Tool AI.